Private Kubernetes Cluster with kops

I’ve scoured the web looking for a simple tutorial on how to set up kops with a private API, a private DNS zone, and a private network topology.

Most of the blog posts I’ve come across go with the default configuration or just tweak it at a superficial level.

That’s the motivation for this post. We are going to set up a highly available Kubernetes cluster on AWS using kops and terraform. The cluster will have an unexposed API, private nodes (all running in private subnets), and a private DNS zone.

Terraform setup

We’ll follow infrastructure-as-code best practices and use terraform to make sure our setup is repeatable.

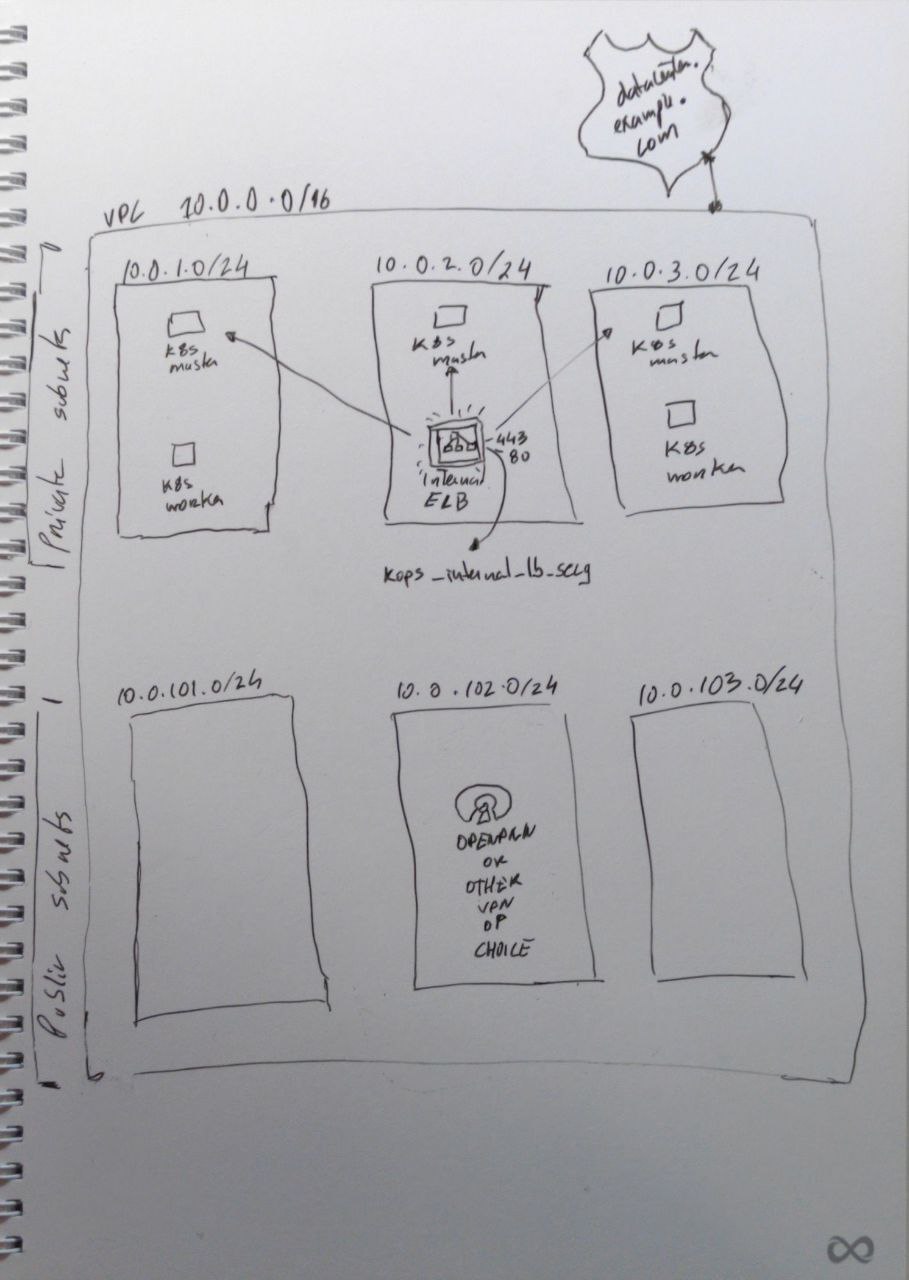

Bellow is a bad drawing that tries to explain how the infrastructure will look like. I know of the existence of draw.io, but we are trying to be edgy here, ok!? 🔥

Fig.1 - VPC setup to help explain the terraform configuration

First of all, we’ll set up a VPC with private subnets, where our nodes will run, and public subnets, which are needed to run ELBs for Ingress and Services of type LoadBalancer. We’ll also create a private DNS zone, an S3 bucket to store the kops state, and a security group that we’ll attach to the internal API ELB. Here is the code:

# main.tf
provider "aws" {
  region = "us-west-2"
}

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "4.32.0"
    }
  }
}

data "aws_region" "current" {}

locals {
  region       = data.aws_region.current.name
  azs          = formatlist("%s%s", local.region, ["a", "b", "c"])
  domain       = "datacenter.example.com"
  cluster_name = "test.datacenter.example.com"
}

# Private hosted zone, associated with the VPC
module "zones" {
  source  = "terraform-aws-modules/route53/aws//modules/zones"
  version = "2.9.0"

  zones = {
    (local.domain) = {
      vpc = [
        {
          vpc_id = module.vpc.vpc_id
        }
      ]
    }
  }
}

module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "3.16.0"

  name = format("%s-vpc", local.cluster_name)
  cidr = "10.0.0.0/16"

  azs             = local.azs
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24", "10.0.103.0/24"]

  # A single NAT gateway shared by all private subnets
  enable_nat_gateway     = true
  single_nat_gateway     = true
  one_nat_gateway_per_az = false

  enable_dns_support   = true
  enable_dns_hostnames = true

  private_subnet_tags = {
    "kubernetes.io/role/internal-elb" = 1
  }

  public_subnet_tags = {
    "kubernetes.io/role/elb" = 1
  }

  tags = {
    Application = "network"
    format("kubernetes.io/cluster/%s", local.cluster_name) = "shared"
  }
}

# Bucket that holds the kops state
module "s3_bucket" {
  source  = "terraform-aws-modules/s3-bucket/aws"
  version = "3.4.0"

  bucket = "kops-state-datacenter"
  acl    = "private"

  versioning = {
    enabled = true
  }
}

# Security group for the internal API ELB; only allows traffic from inside the VPC
module "kops_internal_lb_secg" {
  source  = "terraform-aws-modules/security-group/aws"
  version = "4.13.1"

  name   = "Security group for internal ELB created by kops"
  vpc_id = module.vpc.vpc_id

  ingress_cidr_blocks = [module.vpc.vpc_cidr_block]
  ingress_rules       = ["https-443-tcp", "http-80-tcp"]
}

We’ll need some information about our infrastructure to template our kops configuration file, so we expose it as terraform outputs.

# outputs.tf
output "region" {
  value = local.region
}

output "vpc_id" {
  value = module.vpc.vpc_id
}

output "vpc_cidr_block" {
  value = module.vpc.vpc_cidr_block
}

output "public_subnet_ids" {
  value = module.vpc.public_subnets
}

output "public_route_table_ids" {
  value = module.vpc.public_route_table_ids
}

output "private_subnet_ids" {
  value = module.vpc.private_subnets
}

output "private_route_table_ids" {
  value = module.vpc.private_route_table_ids
}

output "default_security_group_id" {
  value = module.vpc.default_security_group_id
}

output "nat_gateway_ids" {
  value = module.vpc.natgw_ids
}

output "availability_zones" {
  value = local.azs
}

output "kops_s3_bucket_name" {
  value = module.s3_bucket.s3_bucket_id
}

output "k8s_api_http_security_group_id" {
  value = module.kops_internal_lb_secg.security_group_id
}

output "cluster_name" {
  value = local.cluster_name
}

output "domain" {
  value = local.domain
}

At this point, you can run terraform apply to bootstrap the base infrastructure.

Templating our kops manifest

# kops.tmpl
apiVersion: kops/v1alpha2
kind: Cluster
metadata:
  name: {{.cluster_name.value}}
spec:
  api:
    loadBalancer:
      type: Internal
      additionalSecurityGroups: ["{{.k8s_api_http_security_group_id.value}}"]
  dnsZone: {{.domain.value}}
  authorization:
    rbac: {}
  channel: stable
  cloudProvider: aws
  configBase: s3://{{.kops_s3_bucket_name.value}}/{{.cluster_name.value}}
  # Create one etcd member per AZ
  etcdClusters:
  - etcdMembers:
  {{range $i, $az := .availability_zones.value}}
    - instanceGroup: master-{{.}}
      name: {{. | replace $.region.value "" }}
  {{end}}
    name: main
  - etcdMembers:
  {{range $i, $az := .availability_zones.value}}
    - instanceGroup: master-{{.}}
      name: {{. | replace $.region.value "" }}
  {{end}}
    name: events
  iam:
    allowContainerRegistry: true
  kubernetesVersion: 1.25.2
  masterPublicName: api.{{.cluster_name.value}}
  networkCIDR: {{.vpc_cidr_block.value}}
  kubeControllerManager:
    clusterCIDR: {{.vpc_cidr_block.value}}
  kubeProxy:
    clusterCIDR: {{.vpc_cidr_block.value}}
  networkID: {{.vpc_id.value}}
  kubelet:
    anonymousAuth: false
  networking:
    amazonvpc: {}
  nonMasqueradeCIDR: {{.vpc_cidr_block.value}}
  subnets:
  # Public (utility) subnets, one per AZ
  {{range $i, $id := .public_subnet_ids.value}}
  - id: {{.}}
    name: utility-{{index $.availability_zones.value $i}}
    type: Utility
    zone: {{index $.availability_zones.value $i}}
  {{end}}
  # Private subnets, one per AZ
  {{range $i, $id := .private_subnet_ids.value}}
  - id: {{.}}
    name: {{index $.availability_zones.value $i}}
    type: Private
    zone: {{index $.availability_zones.value $i}}
    egress: {{index $.nat_gateway_ids.value 0}}
  {{end}}
  topology:
    dns:
      type: Private
    masters: private
    nodes: private
---
# Create one master per AZ
{{range .availability_zones.value}}
apiVersion: kops/v1alpha2
kind: InstanceGroup
metadata:
  labels:
    kops.k8s.io/cluster: {{$.cluster_name.value}}
  name: master-{{.}}
spec:
  machineType: t3.medium
  maxSize: 1
  minSize: 1
  role: Master
  nodeLabels:
    kops.k8s.io/instancegroup: master-{{.}}
  subnets:
  - {{.}}
---
{{end}}
apiVersion: kops/v1alpha2
kind: InstanceGroup
metadata:
  labels:
    kops.k8s.io/cluster: {{.cluster_name.value}}
  name: nodes
spec:
  machineType: t3.medium
  maxSize: 2
  minSize: 2
  role: Node
  nodeLabels:
    kops.k8s.io/instancegroup: nodes
  subnets:
  {{range .availability_zones.value}}
  - {{.}}
  {{end}}

NOTE: Notice that the api.loadBalancer is of type Internal and that additionalSecurityGroups is defined. Also, notice the dnsZone. For networking we chose to use amazonvpc (Find more networking models here). Finally, take a look at the topology stanza, where each of the keys dns, masters, and nodes is set to private.
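To make the range loops in the template a bit more concrete, here is a rough shell sketch of the names they should expand to for the three availability zones defined in main.tf. The helper etcd_member_name is hypothetical and only mimics the template's replace step for AZ-style names; it is not something kops provides.

```shell
#!/usr/bin/env bash
# Values copied from main.tf; adjust if you changed the region or AZ list.
region="us-west-2"
azs="us-west-2a us-west-2b us-west-2c"

# Hypothetical helper: strips the leading region from an AZ name
# (us-west-2a -> a), similar to `replace $.region.value ""` in kops.tmpl.
etcd_member_name() {
  local az="$1"
  printf '%s\n' "${az#"$region"}"
}

# Print the instance group, etcd member, and subnet names per AZ.
for az in $azs; do
  echo "instanceGroup=master-${az} etcdMember=$(etcd_member_name "$az") privateSubnet=${az} utilitySubnet=utility-${az}"
done
```

Each master instance group is pinned to the private subnet named after its AZ, which is why the private subnets in the template use the bare AZ name.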

Now, to template our kops file let’s take a look at the following shell commands.

What do we want to do? We want to create a yaml file with the values that will be used to feed our kops.tmpl file. We also want to initialize some variables that will facilitate the next kops commands (like KOPS_CLUSTER_NAME).

# Convert the terraform outputs from JSON to YAML
TF_OUTPUT=$(terraform output -json | yq -P)
KOPS_VALUES_FILE=$(mktemp /tmp/tfout-"$(date +"%Y-%m-%d_%T_XXXXXX")".yml)
KOPS_TEMPLATE="kops.tmpl"
# Quote the variable so that bash keeps the newlines of the YAML document
KOPS_CLUSTER_NAME=$(echo "$TF_OUTPUT" | yq ".cluster_name.value")
KOPS_STATE_BUCKET=$(echo "$TF_OUTPUT" | yq ".kops_s3_bucket_name.value")
echo "$TF_OUTPUT" > "$KOPS_VALUES_FILE"
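Since everything that follows depends on these variables, it may be worth failing early when one of them did not resolve. The sketch below uses a hypothetical helper, require_value, and assumes that yq prints null for a missing key; treat it as a starting point rather than a complete check.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of kops or yq): fails when a value is
# empty or the literal string "null".
require_value() {
  local name="$1" value="$2"
  if [ -z "$value" ] || [ "$value" = "null" ]; then
    echo "error: ${name} is empty or null; check 'terraform output'" >&2
    return 1
  fi
}

# Usage, once the variables above are set:
#   require_value KOPS_CLUSTER_NAME "$KOPS_CLUSTER_NAME" || exit 1
#   require_value KOPS_STATE_BUCKET "$KOPS_STATE_BUCKET" || exit 1
```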

Once you have the above variables loaded you can issue the kops commands.

kops toolbox template --name ${KOPS_CLUSTER_NAME} --values ${KOPS_VALUES_FILE} --template ${KOPS_TEMPLATE} --format-yaml > cluster.yaml

kops replace --state s3://${KOPS_STATE_BUCKET} --name ${KOPS_CLUSTER_NAME} -f cluster.yaml --force
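Because the whole point of this setup is that nothing is exposed, a quick look at the rendered cluster.yaml can catch a template mistake before any infrastructure is created. The following is a minimal sketch: check_private_manifest is a hypothetical helper that only greps for the three settings discussed in the note above, and it assumes the rendered file keeps them in the same key: value form as the template.

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns non-zero if any of the expected
# "private" settings is missing from the rendered manifest.
check_private_manifest() {
  local manifest="$1" rc=0 pattern
  for pattern in 'type: Internal' 'masters: private' 'nodes: private'; do
    if ! grep -q -- "$pattern" "$manifest"; then
      echo "missing in ${manifest}: ${pattern}" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage: check_private_manifest cluster.yaml
```

This is a plain text match, not a YAML-aware validation, so it only tells you that the strings are present somewhere in the file.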

Now you have two options: let kops create the cluster directly, or have kops generate terraform code that you apply yourself.

Let kops create the cluster

kops update cluster --state s3://${KOPS_STATE_BUCKET} --name ${KOPS_CLUSTER_NAME} --yes

Let kops create the cluster terraform code

Alternatively, you can let kops create the terraform definition for you, which you can then apply.

kops update cluster --state s3://${KOPS_STATE_BUCKET} --name ${KOPS_CLUSTER_NAME} --out=. --target=terraform

The above command should create a kubernetes.tf file. You can now run terraform apply to bootstrap your Kubernetes cluster.

Make sure that the cluster is healthy

Give your cluster a few minutes to bootstrap after you issue the creation command.

NOTE: Here is something to take into account: your Kubernetes master nodes are now running inside your private subnets, the ELB created by kops is internal (as intended), and the ELB security group that we created only accepts traffic from inside the VPC. So, in order to talk to the API and issue kops commands, you’ll need either a bastion host or a VPN service running in your VPC. Setting up a VPN server is outside the scope of this blog post. AWS has a nice blog post about it.

So, assuming that you have a VPN service running in your VPC, connect to it and make sure that your client can resolve DNS.

You can do that by running the following.

dig +short api.test.datacenter.example.com
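With an internal ELB and a private zone, the addresses returned by dig should fall inside the VPC CIDR (10.0.0.0/16 in main.tf). As a rough sketch, the hypothetical helper in_vpc_cidr below does a simple prefix match for that specific CIDR; it is not a general CIDR calculation and would need changing for a different range.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the address starts with 10.0.,
# i.e. it belongs to the 10.0.0.0/16 VPC CIDR assumed from main.tf.
in_vpc_cidr() {
  case "$1" in
    10.0.*.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage, assuming dig is installed and the VPN is connected:
#   for ip in $(dig +short api.test.datacenter.example.com); do
#     if in_vpc_cidr "$ip"; then
#       echo "$ip is inside the VPC"
#     else
#       echo "$ip is NOT inside the VPC"
#     fi
#   done
```

If dig returns nothing at all, the client is most likely not using the VPC resolver, so the private zone is not visible to it.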

Then let kops set up your kubectl context appropriately.

kops export kubeconfig --admin --name ${KOPS_CLUSTER_NAME} --state s3://${KOPS_STATE_BUCKET}

Then run the cluster validation command.

kops validate cluster --wait 10m --name ${KOPS_CLUSTER_NAME} --state s3://${KOPS_STATE_BUCKET}

Your cluster should now be operational.

Delete the resources

If you are just playing around, don’t forget to delete the infrastructure once you’re done!

kops delete cluster --name ${KOPS_CLUSTER_NAME} --state s3://${KOPS_STATE_BUCKET} --yes

terraform destroy