Sonic APIs with FastAPI, SQLModel, FastAPI-crudrouter and testcontainers

In this post, we’ll take a look at three cool FastAPI components that will allow you to write self-documented endpoints with zero boilerplate code. At the end of the post, we’ll also take a peek at an opinionated way of using testcontainers to perform integration tests on your service.

Dependency overview

FastAPI - Python web framework. It’ll automatically generate your service’s OpenAPI schema based on your pydantic models and routes. FastAPI also lets you write routes as either async or sync functions, without forcing you to pick one.

SQLModel - built on top of SQLAlchemy and pydantic by the same creator as FastAPI. SQLModel lets you define your database models (ORM) on top of your pydantic models. Having data validation and model definitions in the same place means we write less code.

FastAPI-crudrouter - automatically generates CRUD routes for you based on your pydantic models and backends / ORMs. (In this post we’ll use the SQLAlchemy backend, since SQLAlchemy is what SQLModel is built on.)

Testcontainers - launches containers for you so you can perform integration testing.

Setup

# requirements.txt
fastapi==0.70.0
uvicorn[standard]==0.15.0
sqlmodel==0.0.4
psycopg2==2.9.1
psycopg2-binary==2.9.1
fastapi-crudrouter==0.8.4
testcontainers==3.4.2

Create a project directory, then run the following inside it to set up a virtual environment and install the dependencies:

virtualenv -p python3.8 -v venv
source venv/bin/activate
pip3 install -r requirements.txt

Application code

Take a look at the code below and the comments under it.

# main.py
import os
from datetime import time

from fastapi import FastAPI
from fastapi_crudrouter import SQLAlchemyCRUDRouter
from sqlmodel import Field, Session, SQLModel, create_engine


class DemoIn(SQLModel):
    # Fields a client sends when creating a Demo (no id)
    description: str
    init: time
    end: time


class Demo(DemoIn, table=True):
    # table=True maps this class to a database table; id is the primary key
    id: int = Field(primary_key=True)


# Connection settings come from the environment, with local defaults
DATABASE: str = os.getenv("DATABASE", "db")
DB_USER: str = os.getenv("DB_USER", "user")
DB_PASSWORD: str = os.getenv("DB_PASSWORD", "password")
DB_HOST: str = os.getenv("DB_HOST", "localhost")
DB_PORT: str = os.getenv("DB_PORT", "5432")

SQLALCHEMY_DATABASE_URL = f"postgresql://{DB_USER}:{DB_PASSWORD}@{DB_HOST}:{DB_PORT}/{DATABASE}"
engine = create_engine(SQLALCHEMY_DATABASE_URL)


# Dependency: yields a session and always closes it afterwards
def get_db():
    db = Session(engine)
    try:
        yield db
    finally:
        db.close()


demo_router = SQLAlchemyCRUDRouter(
    schema=Demo,
    create_schema=DemoIn,
    db_model=Demo,
    db=get_db,
)

app = FastAPI()
app.include_router(demo_router)


@app.on_event("startup")
async def startup_event():
    # Create any missing tables when the app starts
    SQLModel.metadata.create_all(engine)

# Run the code

docker run --name postgres -p 5432:5432 -e POSTGRES_USER=user -e POSTGRES_PASSWORD=password -e POSTGRES_DB=db -d postgres:13

uvicorn main:app --reload

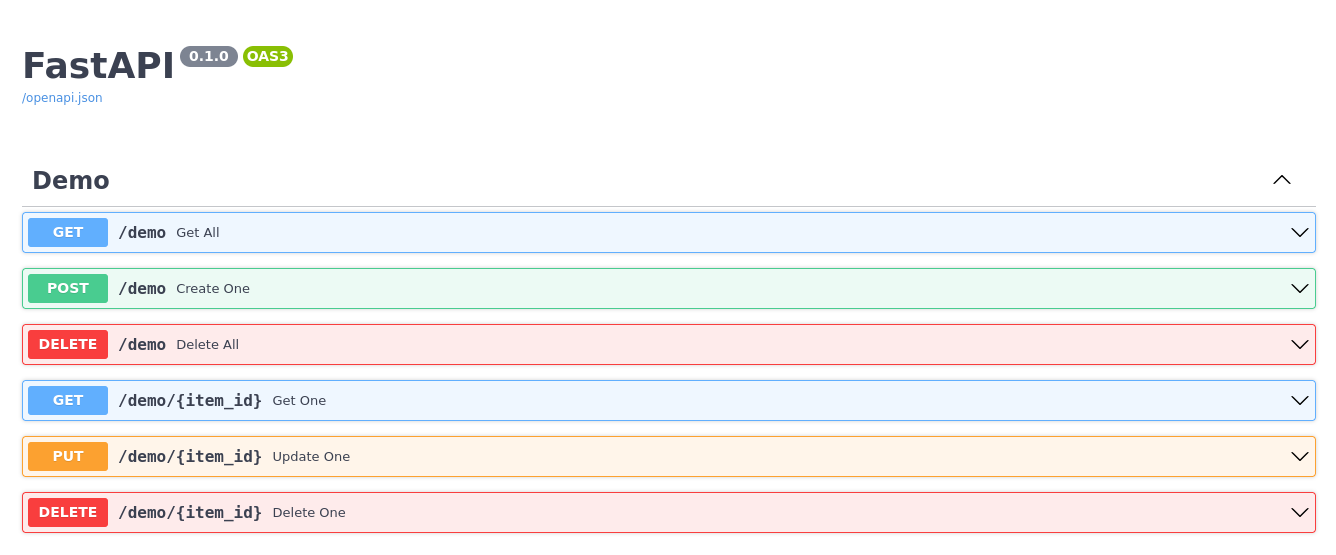

Once our database container is up, we can launch a uvicorn worker and open our service’s /docs endpoint. There we’ll find 6 CRUD routes, automatically generated by fastapi-crudrouter. We can then use the Swagger UI to trigger our endpoints and make sure everything is working as expected.
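If you prefer scripting to clicking through Swagger, the six routes can be spelled out as plain HTTP requests. This is only a sketch: it assumes the service is listening on uvicorn's default http://localhost:8000 and that a row with id 1 exists. build_crud_requests and its dictionary keys are names made up for this example. The requests are built but not sent.

```python
import json
from urllib.request import Request

BASE_URL = "http://localhost:8000"  # assumption: uvicorn's default host and port


def build_crud_requests(base_url: str = BASE_URL) -> dict:
    """Build (but do not send) one request per generated CRUD route."""
    payload = json.dumps(
        {"description": "demo", "init": "10:00:00", "end": "18:00:00"}
    ).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    return {
        # Collection routes
        "get_all": Request(f"{base_url}/demo", method="GET"),
        "create": Request(f"{base_url}/demo", data=payload, headers=headers, method="POST"),
        "delete_all": Request(f"{base_url}/demo", method="DELETE"),
        # Single-item routes (id 1 is an assumption for illustration)
        "get_one": Request(f"{base_url}/demo/1", method="GET"),
        "update": Request(f"{base_url}/demo/1", data=payload, headers=headers, method="PUT"),
        "delete_one": Request(f"{base_url}/demo/1", method="DELETE"),
    }


if __name__ == "__main__":
    for name, request in build_crud_requests().items():
        print(f"{name:<10} {request.get_method():<6} {request.full_url}")
```

To actually hit the service, pass any of these to urllib.request.urlopen while uvicorn is running.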

In main.py we create two classes that inherit from SQLModel: DemoIn inherits from it directly, and Demo inherits from it through DemoIn. SQLModel takes care of SQLAlchemy and Pydantic for us under the hood. We then create an instance of SQLAlchemyCRUDRouter and give it our db session dependency (get_db) and our db_model (setting table=True tells SQLModel to map that class to a table in your database), plus our schema and, optionally, a create_schema.
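One thing to watch in main.py is that the credentials are interpolated into the connection URL as-is, so a password containing characters like @ or / would break the URL. A minimal sketch of one way around that, using the standard library's quote_plus, is below. build_database_url is a hypothetical helper, not something main.py defines, and it reads the same environment variables with the same defaults.

```python
import os
from urllib.parse import quote_plus


def build_database_url(env=None) -> str:
    """Assemble a PostgreSQL URL, percent-encoding the credentials."""
    env = os.environ if env is None else env
    user = quote_plus(env.get("DB_USER", "user"))
    password = quote_plus(env.get("DB_PASSWORD", "password"))
    host = env.get("DB_HOST", "localhost")
    port = env.get("DB_PORT", "5432")
    database = env.get("DATABASE", "db")
    return f"postgresql://{user}:{password}@{host}:{port}/{database}"


if __name__ == "__main__":
    # "@" and "/" in the password are encoded as %40 and %2F
    print(build_database_url({"DB_PASSWORD": "p@ss/word"}))
```

With the defaults untouched, this produces the same URL as the f-string in main.py.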

Taking a look at the contents of our Postgres container we can see that a table named demo was created with the proper fields (id, description, init and end).

~/tmp » docker exec -it postgres psql -d db -U user -c "\d+ demo"
                                                          Table "public.demo"
   Column    |          Type          | Collation | Nullable |             Default              | Storage  | Stats target | Description
-------------+------------------------+-----------+----------+----------------------------------+----------+--------------+-------------
 description | character varying      |           | not null |                                  | extended |              |
 init        | time without time zone |           | not null |                                  | plain    |              |
 end         | time without time zone |           | not null |                                  | plain    |              |
 id          | integer                |           | not null | nextval('demo_id_seq'::regclass) | plain    |              |
Indexes:
    "demo_pkey" PRIMARY KEY, btree (id)
    "ix_demo_description" btree (description)
    "ix_demo_end" btree ("end")
    "ix_demo_id" btree (id)
    "ix_demo_init" btree (init)
Access method: heap

Taking a closer look, we’ll see that every column got its own btree index, interesting 🤔. It turns out this is a bug that should be on its way to getting fixed (SQLModel is only at version 0.0.4 at the time of writing).

Testing

# integration_test.py
from typing import Iterator

import pytest
from fastapi.testclient import TestClient
from sqlmodel import Session, SQLModel, create_engine
from testcontainers.core.container import DockerContainer
from testcontainers.core.waiting_utils import wait_for_logs

from main import Demo, app, get_db

POSTGRES_IMAGE = "postgres:13"
POSTGRES_USER = "postgres"
POSTGRES_PASSWORD = "test_password"
POSTGRES_DATABASE = "test_database"
POSTGRES_CONTAINER_PORT = 5432


@pytest.fixture(scope="function")
def postgres_container() -> Iterator[DockerContainer]:
    """
    Setup postgres container
    """
    postgres = (
        DockerContainer(image=POSTGRES_IMAGE)
        .with_bind_ports(container=POSTGRES_CONTAINER_PORT)
        .with_env("POSTGRES_PASSWORD", POSTGRES_PASSWORD)
        .with_env("POSTGRES_DB", POSTGRES_DATABASE)
    )
    with postgres:
        # "UTC [1]" targets the log line of the main server process (PID 1);
        # \s+ tolerates however many spaces Postgres prints after "LOG:"
        wait_for_logs(
            postgres,
            r"UTC \[1\] LOG:\s+database system is ready to accept connections",
            10,
        )
        yield postgres


@pytest.fixture(scope="function")
def http_client(postgres_container: DockerContainer):
    # Build one engine per test, pointing at this test's container
    host = postgres_container.get_container_host_ip()
    port = postgres_container.get_exposed_port(POSTGRES_CONTAINER_PORT)
    url = f"postgresql://{POSTGRES_USER}:{POSTGRES_PASSWORD}@{host}:{port}/{POSTGRES_DATABASE}"
    engine = create_engine(url)
    SQLModel.metadata.create_all(engine)

    def get_db_override() -> Iterator[Session]:
        with Session(engine) as session:
            yield session

    app.dependency_overrides[get_db] = get_db_override
    # Note: entering TestClient as a context manager also fires the app's
    # startup event, so the default database from main.py must be reachable too
    with TestClient(app) as client:
        yield client
    app.dependency_overrides.clear()


# Test our CRUD routes using the HTTP client provided by FastAPI
def test_demo_crud(http_client: TestClient):
    post_resp = http_client.post("/demo", json={
        "description": "Finally free, found the God in me, And I want you to see, I can walk on water 🌬",
        "init": "10:00:00",
        "end": "18:00:00",
    })
    assert post_resp.status_code == 200

    get_resp = http_client.get("/demo/1")
    assert get_resp.status_code == 200

    # Let's transform our json response into a Demo object and perform some validations 🤝
    demo = Demo(**get_resp.json())
    assert demo.id == 1
    assert demo.end > demo.init
    assert "God" in demo.description
    assert "Enemy" not in demo.description

Above we have an integration test for our service. This is an opinionated way of structuring your tests using pytest fixtures, testcontainers and FastAPI’s TestClient.

First of all, we define a function-scoped fixture, postgres_container. This function is responsible for launching a postgres container and making sure it is ready before it is yielded (notice the wait_for_logs call).
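To get a feel for what waiting on logs involves, here is a small standard-library sketch of the idea: poll a log source until a regular expression matches, or give up after a timeout. This is not testcontainers' implementation. wait_for_pattern and make_fake_log_source are hypothetical names, and the two log lines are made-up samples shaped like the message we wait for. As far as I can tell, the official postgres image prints the "ready" message once from a temporary server while it initialises and again from the final server running as PID 1, which is why the pattern pins `UTC \[1\]`.

```python
import re
import time
from typing import Callable


def wait_for_pattern(read_logs: Callable[[], str], pattern: str,
                     timeout: float = 10.0, interval: float = 0.1) -> float:
    """Poll read_logs() until pattern matches; return the seconds waited."""
    start = time.monotonic()
    while True:
        if re.search(pattern, read_logs(), re.MULTILINE):
            return time.monotonic() - start
        if time.monotonic() - start > timeout:
            raise TimeoutError(f"pattern {pattern!r} not seen within {timeout}s")
        time.sleep(interval)


READY = r"UTC \[1\] LOG:\s+database system is ready to accept connections"

# Made-up sample lines: PID 48 stands in for the temporary init-time server,
# PID 1 for the final server process.
INIT_LINE = "2021-10-20 10:00:00.000 UTC [48] LOG:  database system is ready to accept connections\n"
FINAL_LINE = "2021-10-20 10:00:02.000 UTC [1] LOG:  database system is ready to accept connections\n"


def make_fake_log_source():
    """Return a reader whose output grows by one line per call, plus its call counter."""
    lines = [INIT_LINE, FINAL_LINE]
    state = {"calls": 0}

    def read_logs() -> str:
        state["calls"] += 1
        return "".join(lines[: state["calls"]])

    return read_logs, state


if __name__ == "__main__":
    read_logs, state = make_fake_log_source()
    wait_for_pattern(read_logs, READY, timeout=2.0, interval=0.01)
    print(f"matched after {state['calls']} reads")
```

The first read only contains the PID 48 line, so the pattern does not match until the PID 1 line shows up on the second read.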

We then define http_client (another function-scoped fixture) and declare postgres_container as a dependency of it (notice the postgres_container: DockerContainer parameter, which pytest injects). We could use the same pattern to inject other dependencies our service needs: think blob storage containers, containers running some sort of message queuing system, etc. Notice how we override get_db with get_db_override. This is crucial so that our http client knows where to point in order to reach our postgres container (each container exposes a different port on the host machine).
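If the override mechanism feels like magic, it helps to think of it as a lookup table from the original dependency to its replacement. The toy below sketches that idea with plain functions. It is not FastAPI's implementation, and overrides, resolve and handle_request are hypothetical names used only here.

```python
from typing import Callable, Dict, Iterator

# Maps an original dependency to the callable that should replace it
overrides: Dict[Callable, Callable] = {}


def get_db() -> Iterator[str]:
    yield "production-session"


def get_db_override() -> Iterator[str]:
    yield "test-session"


def resolve(dependency: Callable) -> Callable:
    """Return the override registered for dependency, else the dependency itself."""
    return overrides.get(dependency, dependency)


def handle_request() -> str:
    """Stand-in for a route: resolve the dependency, then use what it yields."""
    provider = resolve(get_db)
    return next(provider())


if __name__ == "__main__":
    print(handle_request())               # no override registered yet
    overrides[get_db] = get_db_override
    print(handle_request())               # the override is used instead
    overrides.clear()
    print(handle_request())               # back to the original
```

The route never changes; only the table entry decides which session it receives, and clearing the table restores the original behaviour.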

With this setup, each test that receives http_client as a dependency gets its own postgres container and http client, leaving it totally isolated from other tests. test_demo_crud serves as a dummy example to show how to interact with our service.
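A small caveat on the end > init assertion: the JSON body carries both values as strings, and whether they become datetime.time objects depends on the model validating its input, which I would not take for granted with table=True models (an assumption worth checking on your SQLModel version). Comparing zero-padded HH:MM:SS strings happens to agree with chronological order, but parsing them explicitly is safer. A minimal sketch, where parse_window is a hypothetical helper:

```python
from datetime import time


def parse_window(payload: dict) -> tuple:
    """Convert the "init" and "end" strings of a response body into time objects."""
    return time.fromisoformat(payload["init"]), time.fromisoformat(payload["end"])


if __name__ == "__main__":
    init, end = parse_window({"init": "10:00:00", "end": "18:00:00"})
    print(end > init)              # chronological comparison on time objects
    print("9:30:00" > "18:00:00")  # string comparison: "9" sorts after "1"
```

The second comparison is true even though 9:30 comes before 18:00, which is the kind of surprise string comparison can cause once a value is not zero-padded.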

Conclusion

I hope this post was useful for you. We took a look at a spicy combination of libraries/frameworks that allow for really quick development and testing. I wouldn’t say that this stack is the best choice for production-critical systems, but it might be useful in many scenarios. Draw your own conclusions 🤓.